Inside RenownAI: How we're using AI to make Counter-Strike moderation actually fair

RenownAI is our new moderation engine built specifically for Counter-Strike. In this post, we'll walk through why we built it, how it works under the hood, and what it unlocks for fairer matches.

The moderation problem

If you've played Counter-Strike for any length of time, you know the frustration.

You're in a match and it's 14-14. Your teammate, who has been silent all game, suddenly decides to force-buy a Scout when the rest of the team is saving. He rushes mid, dies instantly, and then types "gg team bad" in chat.

In a traditional moderation system, that player is invisible. He didn't use a slur. He didn't deal team damage to trigger an auto-kick. He just… ruined the game.

We've all seen the flip side, too: You and a friend are banter-trading in chat, having a laugh. A rigid "bad word" filter catches a joke, and suddenly you're slapped with a cooldown for "toxicity" despite everyone in the server having a good time.

This is the core problem with 99% of automated moderation systems: They lack context.

- They see text strings, but they don't see the game.

- They don't hear the voice comms.

- They don't understand why something happened.

When we started planning Season 3, we knew that if we wanted Renown to be the premier place to play CS, we couldn't rely on off-the-shelf moderation tools. We needed something that understood Counter-Strike as well as a human admin does.

So, we built it ourselves.

Introducing RenownAI

RenownAI isn't just a chat filter. It is an intelligence engine trained specifically on Renown match data. Over the last six months, we have fed it millions of interactions to teach it the difference between a bad play and a griefing play, and the difference between trash talk and harassment.

Most systems analyze isolated incidents. RenownAI analyzes the entire match as a timeline.

To understand why this matters, let's go back to that example of the player force-buying a Scout:

- A traditional system sees a buy event at best. On its own, that event means nothing.

- RenownAI sees that the team economy is low. It checks the voice logs and hears teammates calling for a "save". It sees the player ignore the call, force buy, rush mid, and die alone.

This isn't just a "bad play". In the context of the match, it is griefing. By processing thousands of game events every match, including all voice interactions and text chats, the system builds a profile of behavior that human moderators would take a long time to piece together manually.
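To make the Scout example concrete, here is a minimal sketch of how a timeline check like this could look. It is an illustration only, not RenownAI's actual logic: the `Event` type, the `LOW_ECONOMY` threshold, the event kinds, and the naive keyword match on "save" are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    tick: int         # position on the round timeline
    player: str
    kind: str         # hypothetical kinds: "voice_call", "buy", "death"
    detail: str = ""  # e.g. a transcribed call or the purchased item


# Assumed threshold: average team money below this counts as a low economy.
LOW_ECONOMY = 2000


def ignored_save_call(events, team_money, player):
    """Return True if `player` bought after a teammate called "save"
    while the team economy was low, and then died in the same round."""
    average_money = sum(team_money.values()) / len(team_money)
    if average_money >= LOW_ECONOMY:
        return False  # buying is reasonable when the team can afford it

    heard_save = False
    bought_after_save = False
    for ev in sorted(events, key=lambda e: e.tick):
        if ev.kind == "voice_call" and ev.player != player:
            # Naive keyword match; a real system would need far more nuance.
            if "save" in ev.detail.lower():
                heard_save = True
        elif ev.player == player and ev.kind == "buy" and heard_save:
            bought_after_save = True
        elif ev.player == player and ev.kind == "death" and bought_after_save:
            return True
    return False
```

The point of the sketch is the ordering: the buy only counts against the player because it comes after the save call and before the death, which is information a single isolated event cannot carry.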

Context is king: How it works

The core philosophy of RenownAI is that context changes everything.

In our testing, we found that players may use language or actions that look bad in isolation but are normal in the heat of the moment. Teammates banter. Friends queueing together might team-damage each other for a laugh in a 12-2 round.

If you build a system that auto-bans based on a list of "bad words" or simple damage thresholds, you end up banning passionate players and missing the real toxicity.

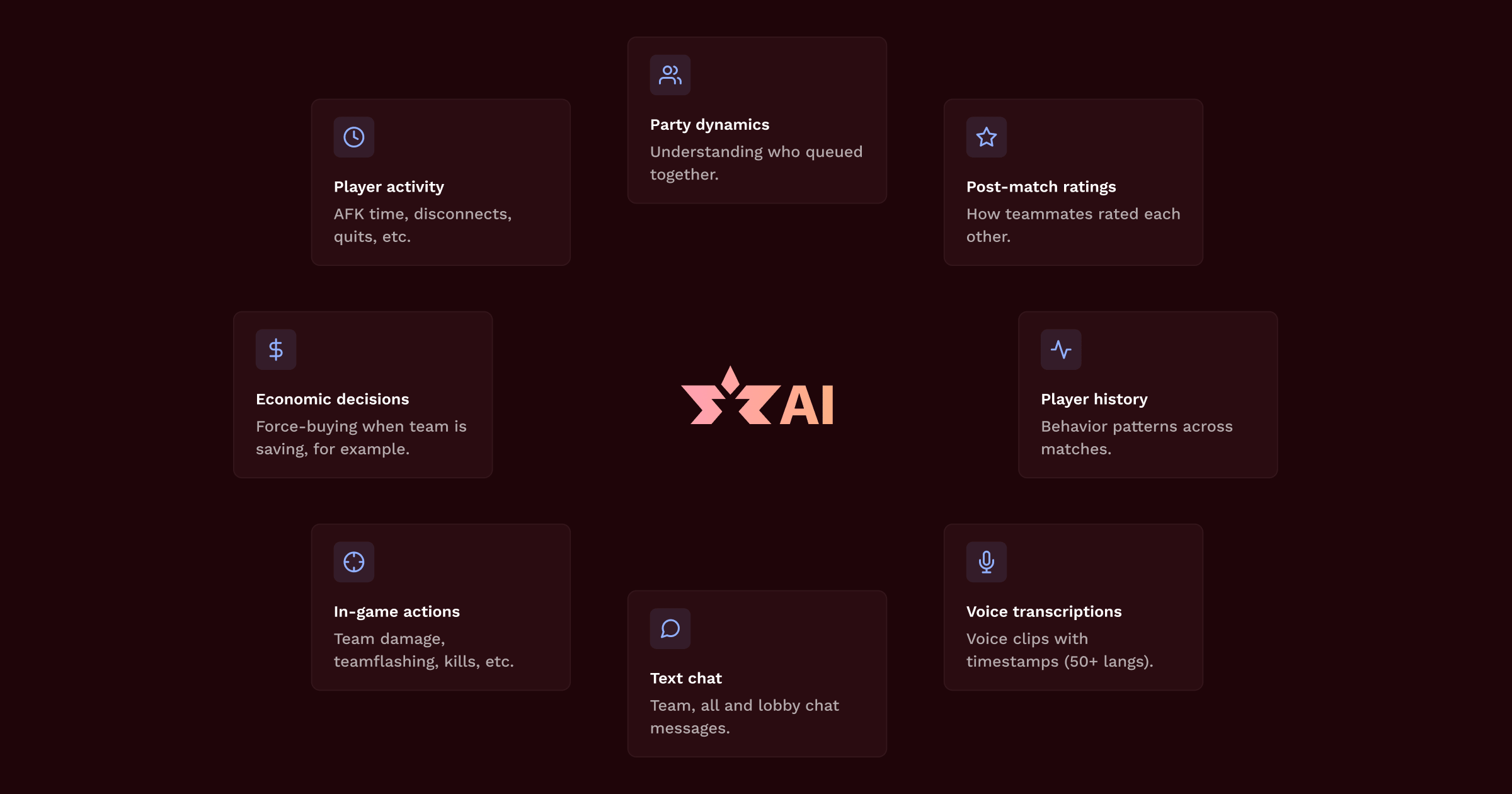

RenownAI ingests data across three main pillars:

- Game events: Teamflashes, AFK time, economy deviations, disconnects, kills, deaths, etc.

- Voice & text: Transcription and sentiment analysis in over 50 languages.

- Party dynamics: Understanding who is queued together.
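As a rough picture of how these three pillars might come together, the sketch below merges game events and comms into a single tick-ordered timeline and keeps party membership alongside it. The `MatchContext` type, the `(tick, source, description)` tuple layout, and the function name are assumptions for illustration, not a description of our internal schema.

```python
import heapq
from dataclasses import dataclass, field


@dataclass
class MatchContext:
    # Each timeline entry is a hypothetical (tick, source, description) tuple.
    timeline: list = field(default_factory=list)
    # Hypothetical mapping of player name -> party id for queued groups.
    parties: dict = field(default_factory=dict)


def build_context(game_events, comms, parties):
    """Merge two streams that are already sorted by tick into one
    timeline, and attach the party information to the same object."""
    timeline = list(heapq.merge(game_events, comms, key=lambda item: item[0]))
    return MatchContext(timeline=timeline, parties=dict(parties))
```

For example, merging a game stream with ticks 5 and 40 and a comms stream with ticks 3 and 41 yields entries in the order 3, 5, 40, 41, so a chat message can be read right next to the play that prompted it.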

Your post-match teammate ratings and reports are the first signals RenownAI looks at. From there, it scans the full match timeline and cross-checks every claim against the hard evidence (game events, voice logs, and text logs) to verify whether toxicity or griefing actually happened.

What gets escalated to a human moderator depends on severity, the confidence of the evidence, and your reporter credibility.

- If you spam false reports, your credibility drops, and your future reports carry less weight.

- If you consistently report accurately, your credibility rises, and your reports are prioritized, even when the evidence is less clear.

Your reports tell us where to look. RenownAI confirms what happened.
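One way to picture the relationship between severity, evidence, and credibility is the sketch below. The formula, the step size, and the threshold are invented for illustration and are not the values RenownAI uses; the only properties taken from the description above are that credibility moves with report accuracy, that a credible reporter can get a case with weaker evidence looked at, and that nothing is escalated without evidence.

```python
def update_credibility(credibility, report_confirmed, step=0.1):
    """Nudge a reporter's credibility up after a confirmed report and
    down after a false one, clamped to the range [0, 1]."""
    delta = step if report_confirmed else -step
    return min(1.0, max(0.0, credibility + delta))


def should_escalate(severity, evidence_confidence, credibility, threshold=0.4):
    """Decide whether a case goes to a human moderator.

    All inputs are assumed to lie in [0, 1]. Credibility only scales the
    score, so a case with zero evidence never escalates, no matter who
    reported it."""
    score = severity * evidence_confidence * (0.5 + 0.5 * credibility)
    return score >= threshold
```

With these made-up numbers, a severe case (0.9) with moderate evidence (0.6) is escalated for a fully credible reporter but not for one whose credibility has dropped to zero.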

The human element (and busting myths)

One of the biggest fears players have regarding AI moderation is the idea of the "Robot Overlord" banning people randomly. We want to be very clear about how this workflow operates.

RenownAI acts as the prosecutor, not the judge.

Because the system is capable of aggregating so much evidence, it builds a comprehensive "case file". It pulls the specific voice clip where a slur was used. It highlights the exact tick where the team-kill happened. It flags the chat logs leading up to the incident.

Instead of a mod having to watch a 45-minute demo to find why a report was issued, they are presented with a concise, evidence-backed RenownAI report. They can listen to the audio directly in the browser, check the logs, and make a decision in minutes.

This solves two massive problems:

- Speed: We can action bans faster than ever before.

- Fairness: A human always verifies context for severe punishments.

We often hear the myth: "If a 4-stack reports me as a solo player, I'll get auto-banned."

That's not true. RenownAI knows who is queued together. If a 4-stack reports a solo player, the system treats those reports as a single signal source. Furthermore, because the system always looks for evidence from the match itself, false reports from salty stacks are instantly discarded (and also lower their future credibility).

You cannot be banned by RenownAI solely because people clicked the report button; the evidence must exist in the match data.
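The "single signal source" idea can be sketched in a few lines. This is a simplified illustration, assuming a hypothetical `party_of` mapping from player name to party id, with solo players absent from the mapping.

```python
def independent_report_sources(reporters, party_of):
    """Collapse reports so that each party counts once.

    `party_of` maps player name -> party id (assumed structure). Players
    missing from the mapping are treated as solo and count individually."""
    sources = set()
    for reporter in reporters:
        if reporter in party_of:
            sources.add(("party", party_of[reporter]))
        else:
            sources.add(("solo", reporter))
    return sources
```

Four reports from one 4-stack produce a set of size one, so a stack cannot outvote a solo player by sheer report count. The reports still only point at where to look; the match evidence decides the rest.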

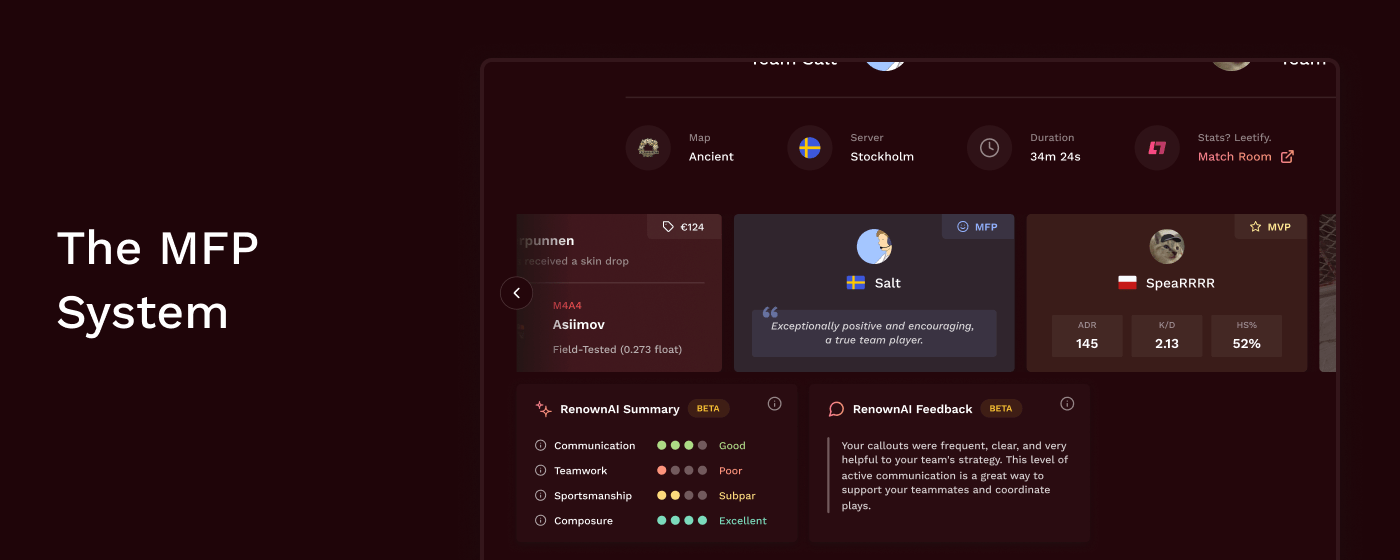

Positive reinforcement: The MFP system

When we designed the Season 3 ecosystem, we realized that traditional systems are entirely negative. They only tell you when you've done something wrong. They never tell you when you're doing something right.

If we want to improve match quality, punishments aren't enough. We need to incentivize the behaviors that make CS fun. This is why we built a completely new award, the Most Friendly Player (MFP), on top of the RenownAI engine.

After every match, the system analyzes who contributed the most to the team environment. Who communicated positions clearly? Who kept morale up when you were down 0-5? Who dropped weapons for teammates?

The system awards the MFP to that player. It's a small nod, but it signals that how you play matters.
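As a toy illustration of the selection step, the sketch below picks the player with the highest total of positive signals. The signal names and the plain unweighted sum are assumptions for the example; the real analysis looks at far richer context than a handful of counters.

```python
def pick_mfp(positive_signals):
    """Return the player with the highest total of positive signals,
    or None if there are no players or nobody scored above zero.

    `positive_signals` maps player name -> {signal name: count}. Ties go
    to whichever tied player appears first in the mapping."""
    totals = {
        player: sum(counts.values())
        for player, counts in positive_signals.items()
    }
    if not totals or max(totals.values()) == 0:
        return None
    return max(totals, key=totals.get)
```

Returning `None` when every total is zero reflects one possible design choice: the award should not go to someone by default in a match where nobody did anything to earn it.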

Additionally, we are now providing Personalized Feedback on your match pages. We break down your behavior into Communication, Teamwork, Sportsmanship, and Composure.

- Getting a low "Teamwork" score? Stop force-buying when the team is saving for the next round.

- Low "Sportsmanship" score? Maybe stop typing "ez" after a close 13-11 win.

This feedback loop gives players a chance to self-correct before they ever hit the penalty threshold.

The future of match quality

We built RenownAI for the teammate who keeps calling strats even when it's 3-11.

For the IGL who takes a deep breath instead of flaming a whiff.

For the anchor who holds B alone for the 10th round in a row and still says "nice try" when things fall apart on A.

The system is currently in "Beta", and while it is already incredibly powerful, we know there will be quirks to iron out. The important part is that we have the foundation set with an engine that can see, hear, and understand the game.

Jump into a match, check your feedback, earn your MFPs, and help us keep building the best competitive community in Counter-Strike.

We're just getting started!